Elaine (Yi Hui) Aw

Tech Ninja

Jan - Mar 2018

Selected for a Front-End Web Developer programme

These are my completed assignments for the 3-month programme: codepen link

October 2017

This portfolio site is a Udacity final project for the "Intro to HTML and CSS" class:

https://www.udacity.com/course/intro-to-html-and-css--ud304

We were supposed to replicate the following site based on what we had learnt: https://storage.googleapis.com/supplemental_media/udacityu/3158088581/design-mockup-portfolio.pdf

You are looking at the working version of that site on this page.

Overall, it is a simple implementation if you follow the videos; many thanks to the awesome instructors and their examples. I took 3 days instead of the stipulated 3 weeks to finish this site, with most of the time spent putting together portfolio projects to showcase.

This is a free online course, so feel free to try it too.

June 2017

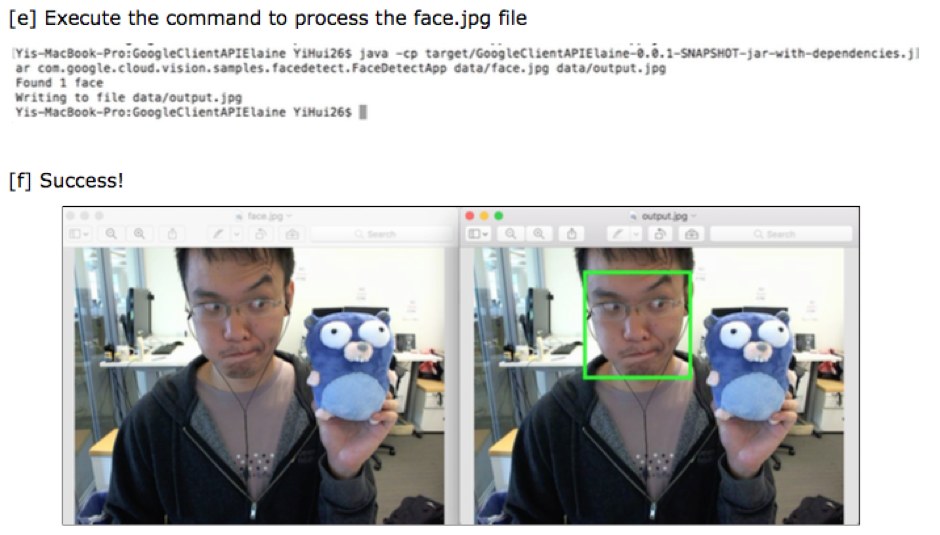

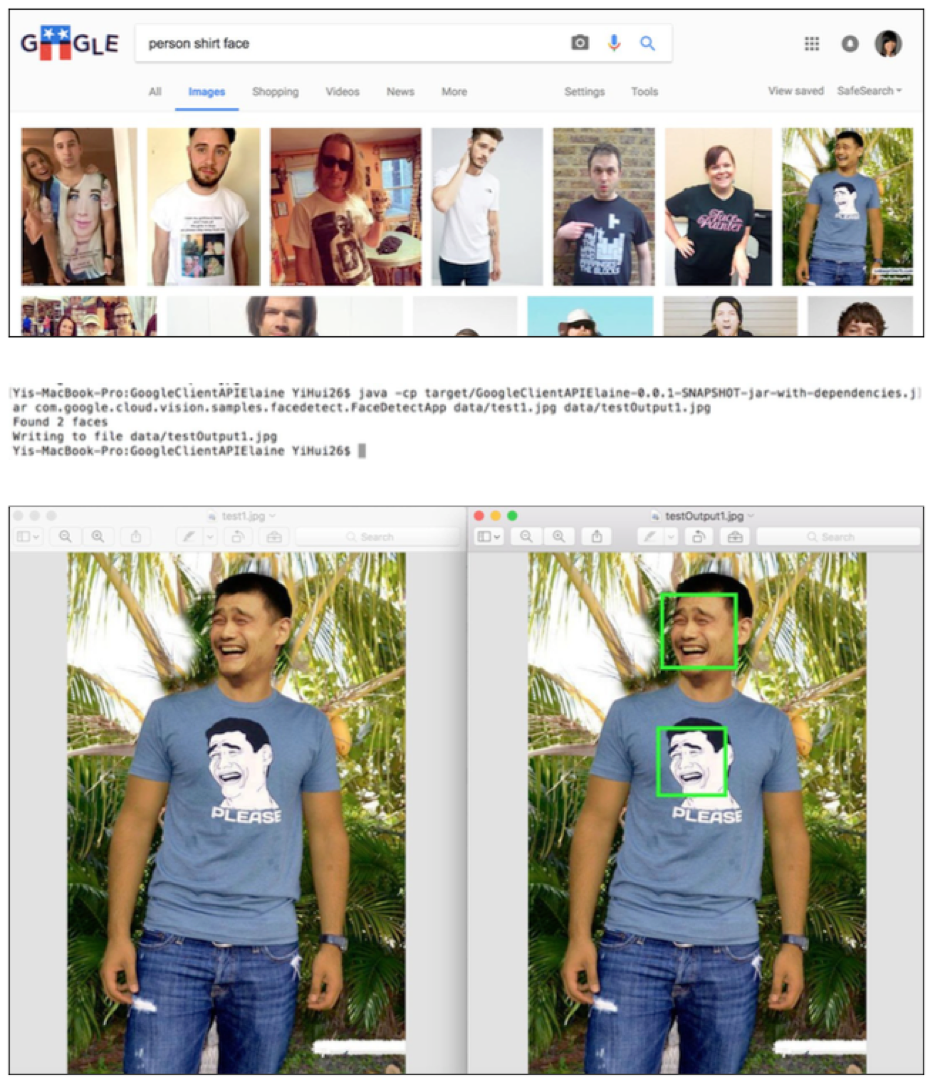

In this assignment, I set up a local environment to perform face detection with Google Cloud Platform's Vision API. Using Node.js was pretty straightforward; setting up in Java took much more time.

To set up in Java, I had to obtain a Service Account Key and install the Maven plugin and the Google plugin on Eclipse to get the program to run.
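
For readers who want to see what a face detection call looks like underneath the client libraries, here is a minimal Python sketch of the request body for the Vision API's REST `images:annotate` method. It is not the Node.js or Java code from the assignment. The helper names `build_face_detection_request` and `count_faces` are made up for illustration, and authentication (the Service Account Key) and the actual HTTP call are left out.

```python
import base64

# Public REST endpoint for the Cloud Vision annotate call.
VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"


def build_face_detection_request(image_bytes, max_results=10):
    """Build the JSON-serializable body for a FACE_DETECTION request.

    Hypothetical helper: it only prepares the payload, it does not send it.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "requests": [
            {
                "image": {"content": encoded},
                "features": [
                    {"type": "FACE_DETECTION", "maxResults": max_results}
                ],
            }
        ]
    }


def count_faces(response):
    """Count the faceAnnotations entries in a parsed annotate response."""
    responses = response.get("responses") or [{}]
    return len(responses[0].get("faceAnnotations", []))
```

The body would be sent as an authenticated POST to `VISION_ENDPOINT`, and `count_faces` applied to the parsed JSON reply.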

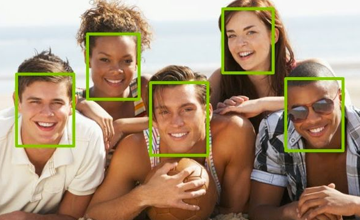

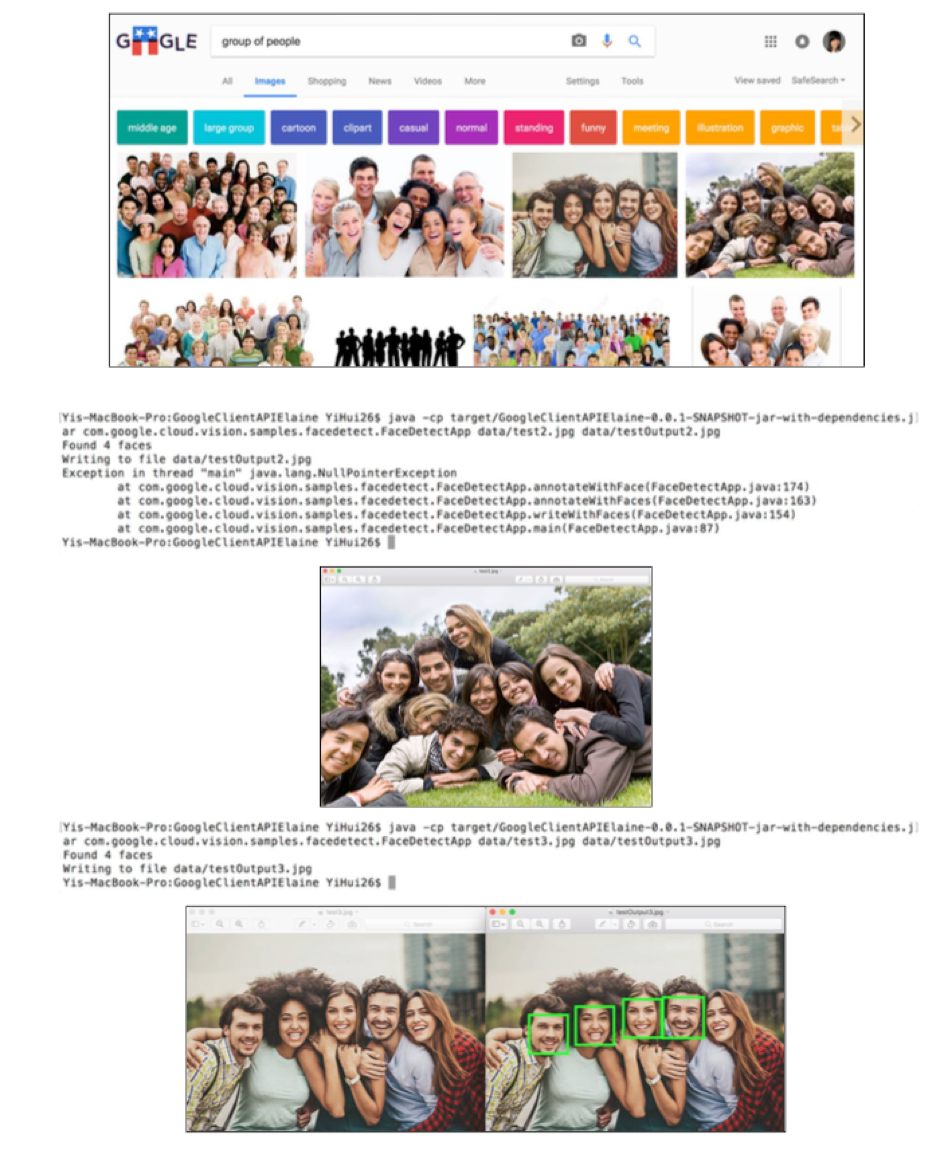

What I find more interesting is testing how well Google's face detection currently works. The following screen captures show my results:

[1] Can the Google Vision API differentiate a real face from a printed face? Nope.

[2] Is there a limit to the number of faces that can be detected? Seems so.

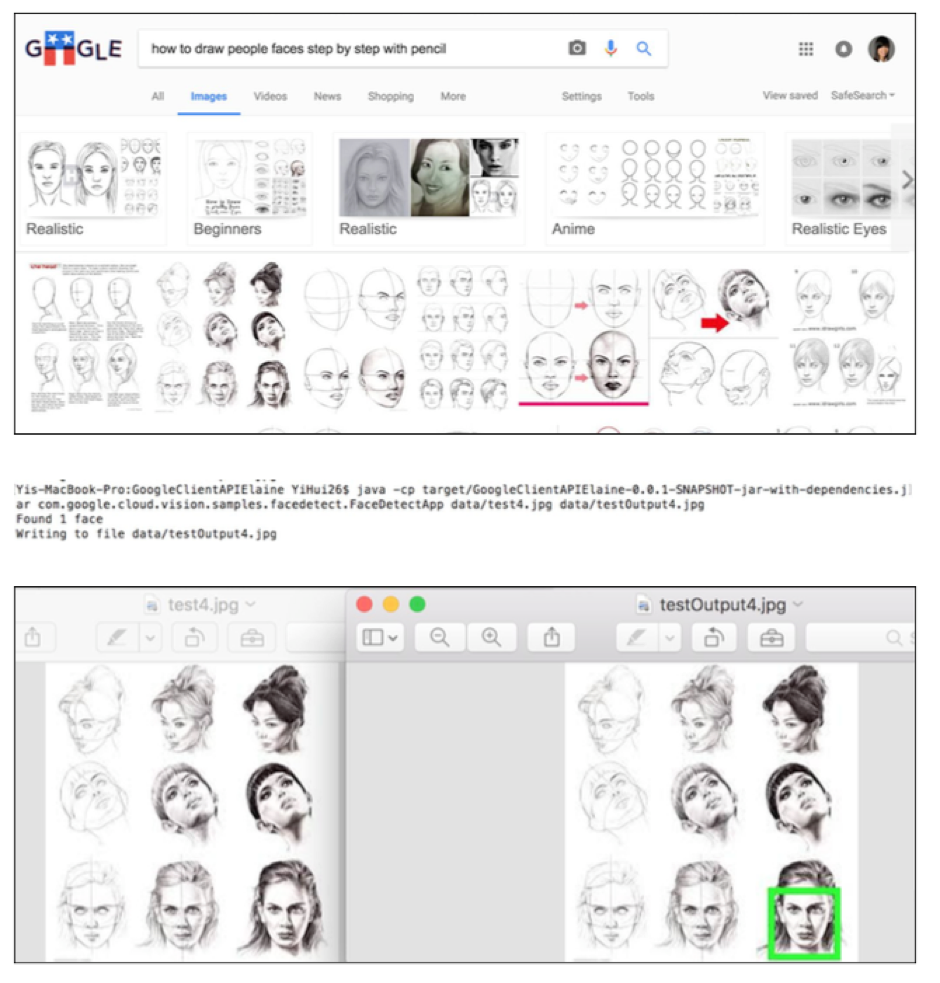

[3] Up to what stage is a face still a face?

June 2017

The program ran on Cloudera, accessed through Oracle VM VirtualBox. The code was written in Java, and the compiled code ran on Hadoop to test the accuracy of the Pi estimate.

Steps:

1. Generate numbers with the GenerateLargeRandomNumbers.java program.

2. The PiCalculation.java program uses MapReduce methods to calculate Pi.

The general idea is that if you inscribe a circle in a square and throw darts randomly at the combination, some darts will land inside the circle and the rest will land outside the circle but inside the square. With enough darts, the fraction that lands inside the circle approaches the ratio of the two areas.

Since (Area of circle = pi * r^2) and (Area of square = 2r * 2r = 4r^2), (Area of circle / Area of square = pi / 4).

With that in mind, (count of darts in the circle / total count of darts in the square) * 4 = estimated pi.
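
The dart-throwing estimate can be sketched in a few lines of Python. This is a single-machine illustration of the same idea, not the Java programs that ran on Hadoop: the names `map_dart`, `reduce_counts`, and `estimate_pi` are assumptions, and the "map" and "reduce" steps here are ordinary Python functions standing in for the MapReduce phases.

```python
import random
from functools import reduce


def map_dart(point):
    """Map step: emit (1, 1) if the dart is inside the unit circle, else (0, 1)."""
    x, y = point
    inside = 1 if x * x + y * y <= 1.0 else 0
    return (inside, 1)


def reduce_counts(left, right):
    """Reduce step: add up partial (inside, total) counts."""
    return (left[0] + right[0], left[1] + right[1])


def estimate_pi(num_darts, seed=0):
    """Estimate pi from darts thrown uniformly at the square [-1, 1] x [-1, 1]."""
    rng = random.Random(seed)
    darts = (
        (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
        for _ in range(num_darts)
    )
    inside, total = reduce(reduce_counts, map(map_dart, darts), (0, 0))
    # (darts in circle / darts in square) * 4 approximates pi.
    return 4.0 * inside / total
```

Calling `estimate_pi(200000)` gives a value close to pi, and the estimate tightens slowly as the number of darts grows, which is why a large generated input is useful.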

February 2017

This is an application built with Node.js that accesses the Philips Hue API to switch the lights on and off, make them blink, and so on.
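
As a rough illustration of what such calls look like, the sketch below builds the request for the Hue bridge's local REST interface, which changes a light's state with a PUT to `/api/<username>/lights/<id>/state`. It is written in Python rather than the Node.js used in the project. The function name `light_state_request`, the bridge address, and the username in the usage note are placeholders, and nothing is actually sent over the network.

```python
import json


def light_state_request(bridge_ip, username, light_id, action):
    """Return (method, url, json_body) for a simple light action.

    Hypothetical helper: supported actions are "on", "off", and "blink".
    """
    bodies = {
        "on": {"on": True},
        "off": {"on": False},
        "blink": {"alert": "select"},  # "select" makes the light flash once
    }
    if action not in bodies:
        raise ValueError("unsupported action: " + action)
    url = f"http://{bridge_ip}/api/{username}/lights/{light_id}/state"
    return "PUT", url, json.dumps(bodies[action])
```

For example, `light_state_request("192.168.1.2", "demo-user", 1, "blink")` describes the request that would make light 1 flash on a bridge at that placeholder address.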

February 2017

This is a mobile calculator application built on Android.

The program logic is in MainActivity.java. Expression evaluation leverages the existing exp4j library.
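
To give a feel for what an expression library does for a calculator, here is a small Python sketch that evaluates an arithmetic string by walking its syntax tree. It is a stand-in technique based on Python's `ast` module, not exp4j and not the app's Java code, and the `evaluate` function is an assumed name.

```python
import ast
import operator

# Only basic arithmetic is allowed; anything else is rejected.
_BINARY = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def evaluate(expression):
    """Evaluate a string such as "3 + 4 * 2" using +, -, *, / and parentheses."""

    def walk(node):
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY:
            return _BINARY[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY:
            return _UNARY[type(node.op)](walk(node.operand))
        raise ValueError("unsupported expression")

    return walk(ast.parse(expression, mode="eval").body)
```

The calculator's buttons only need to build up the expression string; the evaluator takes care of operator precedence and parentheses.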

Summer 2017 (May-Aug)

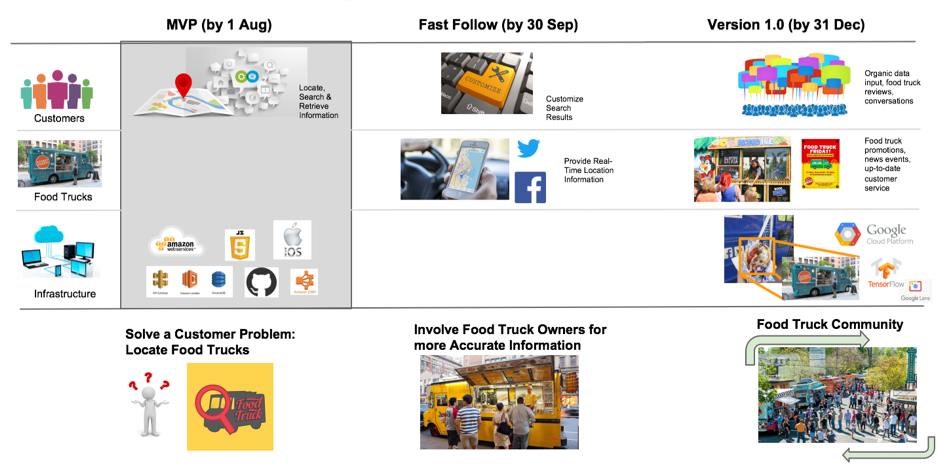

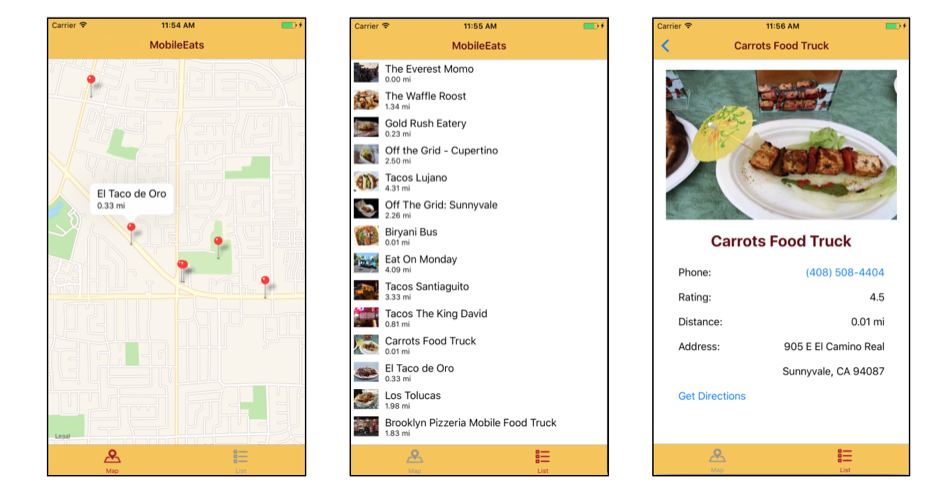

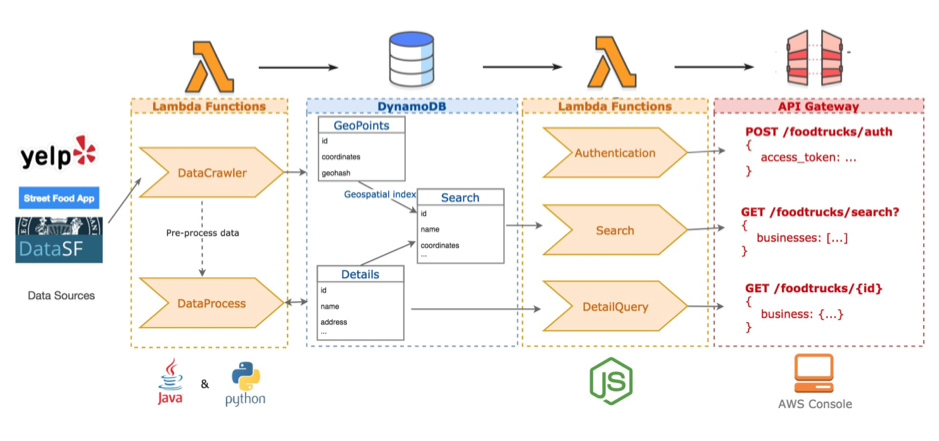

In this 6-person group project, we were tasked with creating something that utilizes cloud technology.

Business Problem: My group loves food and we thought that there was a business opportunity for food trucks. Currently, consumers know that there are food trucks around, but as the trucks are mobile, it gets cumbersome to keep track of their locations every day.

Solution: The vision of our app has three stages. First: Enable consumers to find food trucks. This can be done by leveraging existing APIs like Yelp's API. Second: Enable food truck owners to update their business pages on our platform. They could update them manually, but ideally, updates should be automated to keep the information more up-to-date. Potential sources of information include crawling business pages on Twitter/Facebook, or picking up location updates from the business mobile devices assigned to the truck drivers. Third: Develop a community for food truck lovers and drivers. Social interaction would encourage engagement, and potentially lead to community activities like gathering favorite food trucks at popular events.

Technology Used: iOS, AWS (API Gateway, Lambda Functions, DynamoDB).

Areas I Worked On: Team Management, Product Management (roadmap planning and execution), Development of Lambda Functions in Node.js for DataQuery, Configuration of API Gateway, and Step-by-step documentation write-up.
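
For a sense of what a data-query Lambda function behind API Gateway looks like, here is a minimal Python sketch using the proxy-integration shape (query parameters in, a `statusCode`/`headers`/`body` dictionary out). It is not the project's Node.js code. The `TRUCKS` list is a hypothetical in-memory stand-in for the DynamoDB table, and the `city` parameter and field names are invented for illustration.

```python
import json

# Hypothetical sample rows standing in for the DynamoDB table.
TRUCKS = [
    {"name": "Taco Stop", "city": "Mountain View"},
    {"name": "Noodle Van", "city": "San Jose"},
]


def handler(event, context):
    """Return food trucks, optionally filtered by a ?city=... query parameter."""
    # API Gateway passes None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    city = params.get("city")
    matches = [t for t in TRUCKS if city is None or t["city"] == city]
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"trucks": matches}),
    }
```

In a deployed version, the list comprehension would be replaced by a query against the real table, while the response shape stays the same.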

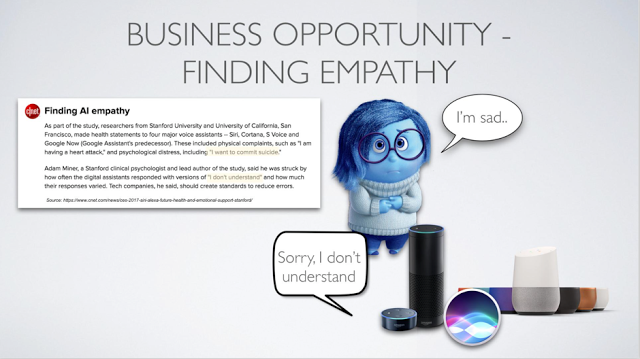

Spring 2017 (Jan-May)

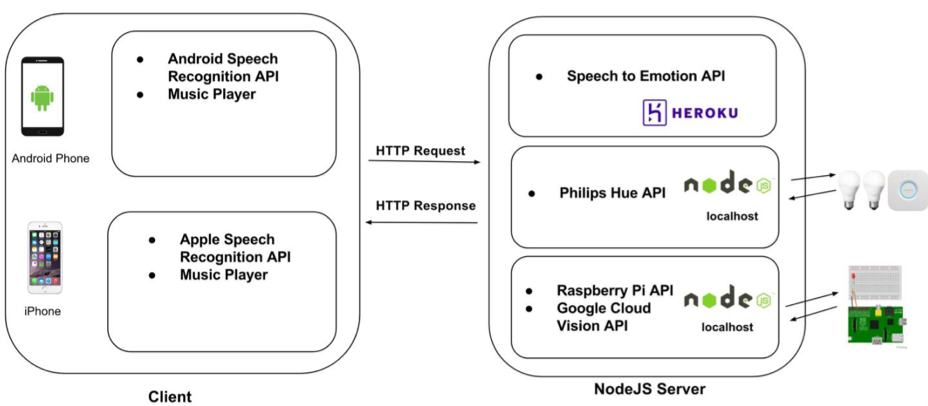

In this 8-person group project, we were tasked with creating something that links up "smart devices" to our mobile phones.

Business Problem: My group searched for a valid problem to solve, and we found that current smart assistants that use NLP (e.g. Siri, Cortana, Google Now) did not recognize the context of emotions. This is backed up by researchers (article) who found that when health complaints involving psychological distress, like "I want to commit suicide", are made to personal assistants, the responses are varied versions of "I don't understand".

Solution: Create an app that takes in emotion input and outputs appropriate responses. For our prototype, we took input from 3 sources: 1. camera image -> facial emotion recognition, 2. voice-to-text -> emotion recognition, and 3. emoticon choice -> emotion recognition. Our output would be in the form of music, accompanied by effects from mood lights. Potentially, the app can be further developed after the MVP to learn and respond in ways that are customized to each individual.

Technology Used: Android, iOS, leveraging an open-source, custom-developed speech recognition API, a speech-to-emotion API, the Philips Hue API, the Raspberry Pi API, and the Google Cloud Vision API.

Areas I Worked On: Team Management, Product Management, and Development with the Philips Hue API using Node.js, hosted on localhost.

For more details (including presentation slides and demo videos), follow the link to this blog post.

October 2016

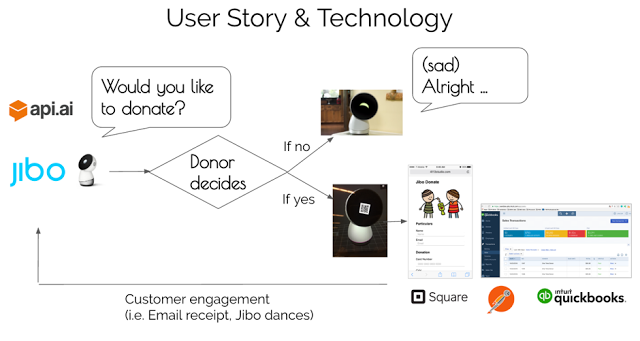

In Nov 2016, excited to have relocated to the Valley to pursue my Master's program, I decided to take part in Quickbooks Connect's hackathon with my schoolmates. The topic was to create an app that saves an SME's or non-profit organization's time or money by solving a unique non-profit problem.

Business Problem: One major activity that non-profit organizations engage in is fundraising. It is important for carrying out their daily operations and providing assistance to their beneficiaries. However, fundraising activities can be time-consuming and largely dependent on help from volunteers.

Solution: Among the many API partners that presented on stage during the hackathon briefing, Jibo stood out for our team. Jibo is a social robot designed to be likable, using its animated movements to interact with others. We decided to test out a simple idea of setting Jibo up to ask for donations for non-profit organizations. The following is our process flow:

Technology Used: Jibo API (to program the robot's movements), API.AI (for natural language processing), Square API (to process donations), Postman Collections (to test our APIs), and Quickbooks API (to store the donations in the non-profit organization's accounting books).

Areas I Worked On: Designing the process flow, coding with the Square API and Quickbooks API, and testing the API calls with Postman collections.

For more details (including presentation slides and demo videos), follow the link to this blog post.

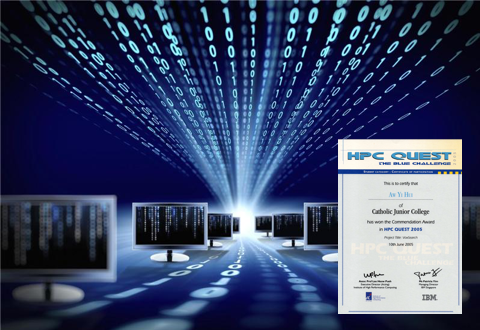

June 2005

In 2005, I took part in this competition with a team of 3 people during the June holidays.

Business Problem:

Our project idea was that if we can find text in Word documents easily using CTRL+F, we should similarly be able to find words in libraries of voice files.

Solution:

We applied the Fast Fourier Transform algorithm to voice files to find words and their locations in audio books that sit in audio libraries. This heavy computation meant we had to leverage supercomputers or parallel computers. My team of 3 borrowed laptops from my school to create our parallel computing cluster. We built our prototype in C++ and ran it on Ubuntu Linux. The results we presented were at >90% accuracy.

Areas I Worked On: Researching the Fast Fourier Transform and building parts of it in C++.
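
One common way to use an FFT for this kind of search is cross-correlation: transform the long recording and the short pattern, multiply one spectrum by the conjugate of the other, and look for the peak after transforming back. The Python sketch below shows that idea on tiny lists of numbers. It is an assumption about the general approach, not a reconstruction of our C++ prototype, and the names `fft`, `ifft`, and `find_pattern` are illustrative.

```python
import cmath


def fft(values):
    """Recursive radix-2 FFT; len(values) must be a power of two."""
    n = len(values)
    if n == 1:
        return list(values)
    even = fft(values[0::2])
    odd = fft(values[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def ifft(values):
    """Inverse FFT computed with the conjugate trick."""
    n = len(values)
    transformed = fft([v.conjugate() for v in values])
    return [v.conjugate() / n for v in transformed]


def find_pattern(signal, pattern):
    """Return the offset in signal where pattern correlates most strongly."""
    size = 1
    while size < len(signal) + len(pattern):
        size *= 2  # zero-pad so the circular correlation does not wrap around
    padded_signal = [complex(v) for v in signal] + [0j] * (size - len(signal))
    padded_pattern = [complex(v) for v in pattern] + [0j] * (size - len(pattern))
    spectrum_s = fft(padded_signal)
    spectrum_p = fft(padded_pattern)
    # Multiplying by the conjugate spectrum yields correlation, not convolution.
    product = [s * p.conjugate() for s, p in zip(spectrum_s, spectrum_p)]
    correlation = [v.real for v in ifft(product)]
    valid_lags = len(signal) - len(pattern) + 1
    return max(range(valid_lags), key=lambda lag: correlation[lag])
```

This plain correlation is not normalized, so a loud passage can outscore a true match; a real word search would need normalization and features more robust than raw samples.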

2003 - 2006

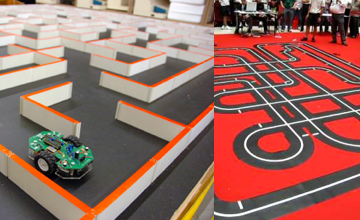

When I was in high school and junior college, I used to be very active in robotics competitions. In 2003-2004, I took part in micromouse competitions, and in 2005-2006, I took part in robo-racing competitions.

Micromouse (2003-2004): In micromouse competitions, the aim is to navigate a "mouse" to the center of the maze as fast as possible to attain the "cheese". Winning takes both a hardware and a software component. For the hardware component, we needed to configure the mouse so that the embedded systems, motors, and wheels worked in sync to move in straight lines over the right distances and to turn exactly 90 degrees when needed. For the software component, I would set up an algorithm to increase the probability of the mouse reaching the cheese as soon as possible. We would not know what the maze looked like until the competition began, after we had submitted our mouse and algorithms.
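
A technique often used for micromouse is flood fill: label every cell with its distance to the goal, then keep stepping to the neighbour with the smallest label. The Python sketch below shows that idea on a small grid. It is not the algorithm I actually used in the competitions, and the names `flood_fill` and `next_move` and the wall representation are assumptions made for the example.

```python
from collections import deque

STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def flood_fill(size, goal, walls):
    """Breadth-first distances from every reachable cell to the goal.

    walls is a set of frozenset({cell_a, cell_b}) pairs; each pair blocks
    movement between two neighbouring cells.
    """
    distance = {goal: 0}
    queue = deque([goal])
    while queue:
        cell = queue.popleft()
        for d_row, d_col in STEPS:
            nxt = (cell[0] + d_row, cell[1] + d_col)
            in_bounds = 0 <= nxt[0] < size and 0 <= nxt[1] < size
            if not in_bounds or nxt in distance:
                continue
            if frozenset({cell, nxt}) in walls:
                continue
            distance[nxt] = distance[cell] + 1
            queue.append(nxt)
    return distance


def next_move(cell, distance, walls):
    """Pick the open neighbouring cell that is closest to the goal."""
    options = [(cell[0] + d_row, cell[1] + d_col) for d_row, d_col in STEPS]
    reachable = [
        c for c in options
        if c in distance and frozenset({cell, c}) not in walls
    ]
    return min(reachable, key=distance.get)
```

Because the maze is unknown beforehand, a mouse using this approach would start with no walls recorded, add each wall it senses to `walls`, and rerun `flood_fill` before choosing the next move.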

Roboracing (2005-2006): For robo-racing, the aim is to make the robo-racer race around the track as fast as possible. As the president of the robotics club, I led my club to clinch among the most awards it had received in the school's history.

Featured Article: http://cjc.edu.sg/web/?p=97

CMU-SV: Software Engineering Management

Fall 2016 (Aug-Dec)